Emerging from Despair

I know I have been radio silent online for much of the past few months. I have to be honest, I have been struggling with profound feelings of despair at watching the large-scale attacks on civil society these past few months, with the knowledge that we have three-and-a-half years left of this. At least.

As a Librarian, watching the civic, institutional and diplomatic damage of the second Trump administration has been personally gutting. In the breadth and depth of its attacks on government institutions, knowledge, research and public service programs, it has been a non-stop shock-and-awe campaign against anyone and anything that benefits the public good.

The cascading chaos of DOGE, the Big Ugly Bill, and the United States reneging on its domestic and international commitments are the most visible actions that the public is seeing. All of that is outrageous enough. But that's just the tip of the iceberg. What people are not seeing are the countless research projects that are silently being cut off. Some of these projects may find funding from other institutions, or other countries. Some, if not most, will probably just fade into obscurity. We are entering a research dark age.

I'm not speaking purely in hypotheticals, either. One of the most alarming examples is the defunding of incredibly valuable mRNA vaccine research grants. I could list countless others, across a myriad of scientific domains – all of them deserve more visibility and advocacy on their own. However, as a Librarian with an eye on Trustworthy AI policy, I have to limit my scope.

In particular, I want to highlight research projects that demonstrate tangible promise for implementing Trustworthy AI policies in the future. The bulk of these research projects were supported by the National Science Foundation (NSF) and the National Institute of Standards and Technology (NIST).

In 2023, the National Science Foundation was funding the National Artificial Intelligence Research Institutes. There were (are) seven research institutes dedicated to various themes:

- Trustworthy AI (TRAILS)

- Intelligent Agents for Next-Generation Cybersecurity (ACTION)

- Climate Smart Agriculture and Forestry (AI-CLIMATE)

- Neural and Cognitive Foundations of Artificial Intelligence (ARNI)

- AI for Societal Decision Making (AI-SDM)

- AI-Augmented Learning to Expand Education Opportunities and Improve Outcomes (INVITE, AI4ExceptionalEd)

In 2024, NIST launched the Trustworthy & Responsible Artificial Intelligence Resource Center (AIRC) and its NIST AI Risk Management Framework (AI RMF 1.0). As I learned about these technology and policy frameworks, I got really excited. I even had the pleasure of speaking about them at IAC24 and DGIQW 2025.

But already, you can see the erasure of some of these research themes by reviewing changes to the NSF's AI Research Institutes website. In its list of research themes, you can see that Trustworthy AI and Climate Science have been de-emphasized. Most grants have been archived indefinitely.

Since then, the Trump Administration has released its AI Action Plan, which I maintain is intentionally vague. It also strategically de-emphasizes and omits the Trustworthy and Sustainability themes of AI research and policy.

From my perspective, this all looks bleak. After some grief, I have to remind myself that we're not starting from zero:

- Most of the research and publications have not been taken down (yet). Much of it is being mirrored elsewhere in case it is

- Some of the research grant funding is still ongoing; other projects are finding other sources of funding

- All of the people behind these projects and papers are still around

- While the US regresses in Trustworthy AI policy, the work of the European Commission, States and other regions moves forward

- This administration is not forever

The feelings of despair are real. They are the product of an intentional campaign. I said shock-and-awe and I meant it. DOGE, and everything else along with it, is meant to dispirit those who believe in the public good in any way whatsoever. If you feel despair, you are not alone and you are not overreacting. This feeling of isolation and helplessness is the point.

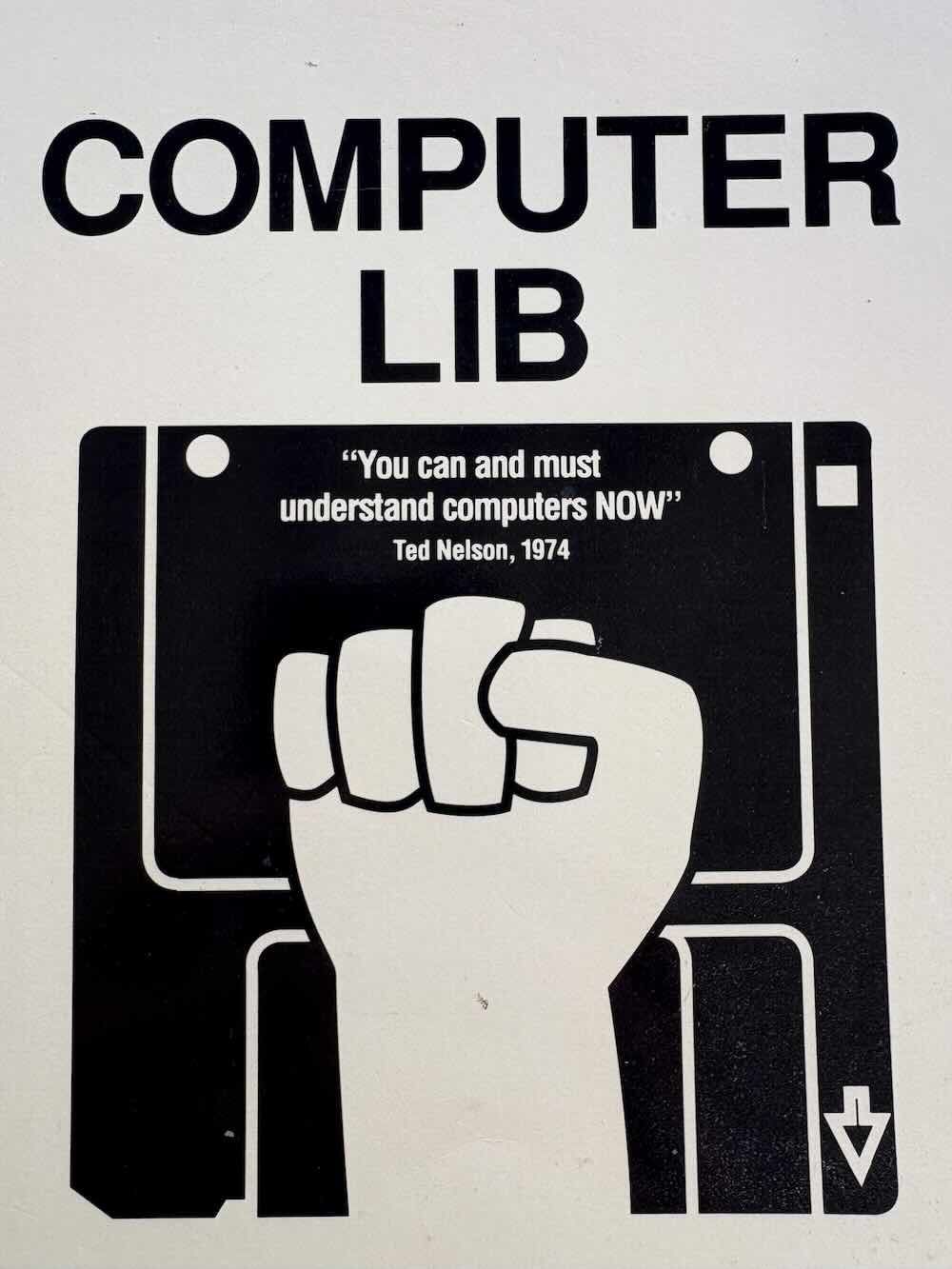

My personal copy of Ted Nelson's Computer Lib. His passion and voice about the importance of information technology as a social concern is a huge inspiration.

As time goes on, I find my despair shifting to anger. I return to the Internet after a Summer hiatus with a sense of intention. I want to find the people, organizations, and papers that are continuing to do good work out there. And by good work I mean work that is making search, AI or any information experience on the Internet more Trustworthy.

Voices like Gary Marcus and Ed Zitron have done great work drawing attention to the larger problems with the current AI hype bubble. I would like to supplement their work by trying to draw more attention to specific technical mechanisms and legal definitions proposed for making generative AI more trustworthy, secure and reliable than it currently is.

Another person whose work I appreciate is Helen Toner, who brings a cybersecurity perspective to analyzing AI technologies. Work from Simon Willison, Kurt Cagle and Jorge Arango has helped me get hands-on experience with large language models, which has given me an understanding I would not have gained otherwise. A lot of amazing people are still doing great work out there. I want to stand on the shoulders of giants to inspire mental models for Trustworthy AI as infrastructure, as policy goals, as work intended for the public good.

Don't listen to the AI intimidation-mongers. You can understand how a Generative AI model works just as you can understand a crystal radio set. This technology is not "above our heads." It's weird, but it's not magic. I've mentioned how I feel that a lot of the public messaging about generative AI intentionally obfuscates how the technology works. My frustration (and anger) with this intentional obfuscation sparked a desire to write articles and give presentations that are as understandable as possible.

In the age of generative AI, I am of the mind that previously arcane issues of Information Science, Information Behavior Theory and Information Literacy have never ever been more culturally important or directly relatable. I aim to tie Trustworthy AI research and policy discussions to real-life, human examples with tangible benefits to the public and our daily lives.

Some topics I want to explore, for example:

- Knowledge graphs, why they're incredibly important (i.e. keeping generative AI models honest and up-to-date), and who is building knowledge graphs as infrastructure for information retrieval of the future.

- The tactics and motivations behind the intentional obfuscation of generative AI technologies in the public discourse

- Exploring new technology trees outside of traditional generative AI stacks, static and dynamic prompting, RAG and model routing (this is currently a gap in my understanding)

- What a post-platform Internet looks like (Protocols Not Platforms!)

- Those documenting the damage to institutions and preparing for a post-MAGA rebuilding.

As I return from time offline, I look forward to connecting with others and taking part in the conversations to come.

As we fight these attacks on information access and the public good, I will leave off on two music-related notes. I will paraphrase Gord Downie, a personal hero of mine, when I say we should not tolerate these attacks on our institutions, collective knowledge and understanding with any patience, tolerance or restraint.

The destruction is overwhelming, but it is not forever. This song by Descartes a Kant has been keeping me going. There will be creation after destruction. You'll see.

Let's find each other. Share solidarity. Let's organize and pick up the pieces from these fucking vandals.

